Google has announced that it is adding site speed, meaning the loading time of a website, as a criterion for its search rankings. This was indicated in a Google post in December and has now been formally announced by Amit Singhal and Matt Cutts of Google's search quality team. As a new parameter, site speed reflects "how quickly a site responds to web requests". The change has been adopted to make this world a happier place.

"Speeding up websites is important — not just to site owners, but to all Internet users. Faster sites create happy users and we've seen in our internal studies that when a site responds slowly, visitors spend less time there," they wrote.

Faster websites reduce operating costs, improve the user experience and, overall, make the internet a more habitable place. But there are two sides to every coin, and some webmasters are not convinced that site speed is a sound ranking factor. What about websites that carry advertisements? They will obviously load more slowly than ad-free sites built from plain HTML. What about websites with Flash content? Worse still, Google's own AdSense and AdWords code is known to slow a website down. Would that ultimately affect a website's rankings?

Google has provided a list of free tools for measuring a website's speed, including Google Page Speed and Yahoo's YSlow. Google might use the Google Toolbar to measure website speed, but is that a reliable measure? The Google duo further commented:

“While site speed is a new signal, it doesn't carry as much weight as the relevance of a page. Currently, fewer than 1% of search queries are affected by the site speed signal in our implementation and the signal for site speed only applies for visitors searching in English on Google.com at this point. We launched this change a few weeks back after rigorous testing. If you haven't seen much change to your site rankings, then this site speed change possibly did not impact your site.”
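For a rough feel of "how quickly a site responds to web requests" from your own machine, a few lines of Python are enough. This is only a sketch, not how Google measures speed (the announcement does not spell that out), and it times a single URL fetch rather than a full page render with images, scripts and ads. The function names `time_request` and `median_response_time` and the `fetch` and `timer` parameters are made up for this example.

```python
import time
import urllib.request
from statistics import median


def time_request(url, fetch=None, timer=time.perf_counter):
    """Return the seconds taken to download the response body for `url`.

    `fetch` and `timer` are hypothetical hooks so the function can be
    exercised without touching the network.
    """
    if fetch is None:
        def fetch(u):
            # Read the whole body so download time is included.
            with urllib.request.urlopen(u, timeout=10) as resp:
                return resp.read()
    start = timer()
    fetch(url)
    return timer() - start


def median_response_time(url, runs=5, fetch=None):
    """Repeat the measurement and take the median to damp network jitter."""
    return median(time_request(url, fetch) for _ in range(runs))
```

Calling `median_response_time("https://example.com/")` returns a time in seconds. Numbers from a single location will differ from what visitors elsewhere see, so treat them as a relative indicator and use tools such as Page Speed or YSlow for a fuller picture.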

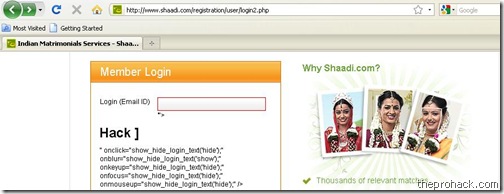
